Be My Eyes: How GPT-4 and Other Neural Networks Aid the Blind and Visually Impaired

Published on 27 September 2023

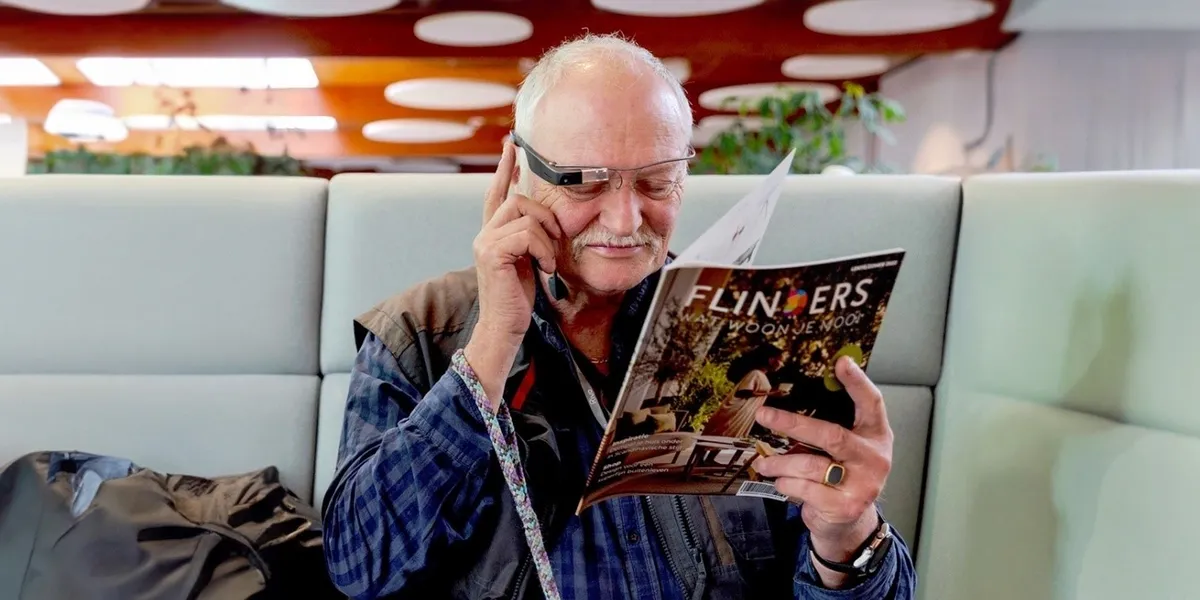

Smart glasses, miniature cameras, and smartphone apps aim to replace the traditional white cane and guide dog for blind and visually impaired people.

Artificial intelligence is not just for entertainment; it is also transforming entire industries. AI systems already help write code, interpret X-rays, and monitor crops, and their reach extends further still. Today, neural networks power digital assistants designed to improve the lives of blind and visually impaired people.

Machine vision, driven by neural networks, can recognize text, objects, and faces and convert that information into accessible formats such as speech or vibration. In this article, we explore popular apps and gadgets built for visually impaired users and assess whether they can truly substitute for human vision.

Seeing AI: Microsoft's "Talking Camera"

Microsoft's Seeing AI is a pioneering mobile app for people with visual impairments. A working version first appeared in 2016, and the app still receives updates and remains highly popular among users.

What can Seeing AI accomplish? When the camera is activated, the app can recognize and audibly describe:

- Information about the surrounding objects, including their location, size, and other characteristics.

- Details about people, including their gender, approximate age, actions, and facial expressions. If a contact's photo is saved in the phone, the app can even announce their name.

- Text, whether handwritten or printed in documents.

- Product names, determined via barcodes.

- Object colors and the brightness of lighting.

- The denomination of paper money.

The app can also analyze photos and other images and describe their contents. Seeing AI's feature set has become the template for many similar applications, which we discuss in more detail later.

Why Has Seeing AI Been So Successful?

One key reason for Seeing AI's success is that the development team includes blind programmers who understand the needs of visually impaired people firsthand. The team is led by software engineer Saqib Shaikh, who lost his sight at the age of seven.

For years, Shaikh had imagined a smart "talking camera" that could describe his surroundings aloud, but no such product existed. So he pursued a career in software development and set out to build one himself.

Shaikh joined Microsoft in 2006, initially working on AI services including the Cortana voice assistant and the Bing search engine. By the mid-2010s, the spread of smartphones and advances in computer vision had converged, making a functional "talking camera" feasible.

Around the same time, Microsoft organized the Deep Vision hackathon to find top talent in machine learning and computer vision. More than 13,000 people from around the world took part, and four of them eventually joined Shaikh's team at the company.

"I first conceived this project during my university years. While discussing new ideas in the dorm, I mentioned, 'We should create glasses with a camera that can observe our surroundings and describe them audibly.' However, technology at that time couldn't make this vision a reality.

In 2014, Microsoft hosted its inaugural hackathon, and I revisited this idea, presenting it to competition participants. The initial prototypes were rudimentary, struggling with tasks like facial recognition and basic functions. Nevertheless, we collaborated with the corporation's leading scientists in the research department.

Deep learning technologies, algorithms, and cloud computing continuously evolved. Eventually, we succeeded in developing a program that could describe the content of photos, marking a true breakthrough."

— Saqib Shaikh, Microsoft Software Engineer, Project Manager of Seeing AI

A working version of Seeing AI was ready in 2016, and the public iOS release followed in July 2017. In the first six months after launch, the app helped people with visual impairments complete more than three million tasks.

Today, Seeing AI relies on Azure Cognitive Services to generate text descriptions of images, read text aloud, and more. In 2021, the app's algorithms were upgraded with Visual Vocabulary (VIVO) pre-training, which builds on the Transformer neural network architecture.

As a result, the updated version was twice as accurate as its predecessor on images from the nocaps test set. Seeing AI now not only identifies the objects in front of the smartphone camera (e.g., "A man and a cat") but also describes how they interact (e.g., "A man is petting a cat").
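To make the difference between the two kinds of description concrete, here is a toy Python sketch. It is not how Seeing AI or VIVO works internally: real captioning models generate sentences end to end from image features. The `Detection` and `Relation` types and both functions are hypothetical names invented for this illustration, and the "recognition results" are hard-coded rather than produced by a model.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    """A recognized object (hypothetical type for this sketch)."""
    label: str  # e.g. "man"


@dataclass
class Relation:
    """An interaction between two recognized objects (hypothetical type)."""
    subject: str  # e.g. "man"
    verb: str     # e.g. "is petting"
    obj: str      # e.g. "cat"


def with_article(noun: str) -> str:
    """Prefix a noun with 'a' or 'an' using a simple vowel check."""
    article = "an" if noun[0].lower() in "aeiou" else "a"
    return f"{article} {noun}"


def capitalize_first(text: str) -> str:
    """Uppercase only the first character, leaving the rest untouched."""
    return text[0].upper() + text[1:]


def list_objects(detections: List[Detection]) -> str:
    """Older-style output: simply enumerate what is in the frame."""
    names = [with_article(d.label) for d in detections]
    if not names:
        return "Nothing recognized"
    if len(names) == 1:
        return capitalize_first(names[0])
    return capitalize_first(", ".join(names[:-1]) + " and " + names[-1])


def describe_scene(detections: List[Detection], relations: List[Relation]) -> str:
    """Newer-style output: prefer an interaction if one is known,
    otherwise fall back to the plain object list."""
    if relations:
        r = relations[0]
        sentence = f"{with_article(r.subject)} {r.verb} {with_article(r.obj)}"
        return capitalize_first(sentence)
    return list_objects(detections)


detections = [Detection("man"), Detection("cat")]
relations = [Relation("man", "is petting", "cat")]

print(list_objects(detections))               # A man and a cat
print(describe_scene(detections, relations))  # A man is petting a cat
```

The fallback in `describe_scene` reflects one plausible design choice: when no interaction is available, a plain list of objects is still more useful to the listener than silence.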

Seeing AI has won numerous awards, including the prestigious Helen Keller Achievement Award from the American Foundation for the Blind. In addition, the Society for Blind and Visually Impaired users of Apple products named Seeing AI the best application three years in a row (2017 to 2019).
